System Analysis and Design: Tutorial & Best Practices

System analysis and design (SAD) is a comprehensive approach to developing or improving effective information systems within organizations. It involves a structured process of examining existing systems, identifying areas for improvement, and designing and deploying new or enhanced systems to meet business objectives.

This discipline combines computer science, information technology, and business management principles to bridge the gap between an organization’s operational needs and technological capabilities. This article will explore key aspects of system analysis and design, providing practical recommendations to help your organization streamline these processes and ultimately enhance the reliability, performance, and scalability of your system.

Summary of system analysis and design concepts

The table below provides a summary of the concepts covered in this article.

| Concept | Description |
|---|---|
| Defining a system | A system comprises interconnected components like databases, servers, networking infrastructure, and user interfaces. |
| Foundations of system analysis and design | The core principles and methodologies that underpin SAD include an iterative process of understanding and documenting system requirements, ensuring stakeholder engagement, and using tools and techniques for conceptualizing and planning system architectures. |
| System analysis phases and steps | Effective system analysis involves a systematic process that starts with requirements gathering, followed by objective setting, system examination, and feature design for the proposed solution, culminating in a detailed implementation plan. |
| System analysis and design patterns | Employing established design patterns in system analysis and design (such as the Ambassador, Circuit Breaker, and Publisher/Subscriber patterns) can streamline development processes, enhance maintainability, and help tackle complex design challenges. |
| Architecture evaluation | ATAM and SAAM critically support engineers in assessing system architecture to ensure that it meets project objectives and adheres to best practices. |
| The CAP theorem | The CAP theorem highlights the tradeoffs in distributed systems among consistency, availability, and partition tolerance. |
| Performance and scalability in distributed systems | Load-balancing strategies and caching mechanisms are best practices that significantly improve distributed systems’ performance and scalability. |
| Best practices in system analysis | Effective practices for system analysis include defining precise requirements and seeking stakeholder feedback. |
| Future SAD trends | Emerging technologies like AI-driven analysis tools, cloud-based design platforms, and DevOps integration tools are reshaping the landscape of system analysis and design. |

Defining a system

A system is a collection of interconnected components that work together to achieve a specific objective. Systems in information technology are composed of various elements, such as databases, servers, networking infrastructure devices, user interfaces, and software applications. SAD involves the analysis and design of these components and how they process data, store information, and facilitate organizational communication and decision-making.

The foundations of system analysis and design

Effective system analysis and design (SAD) builds upon a foundation of core principles and methodologies that ensure that systems meet functional and nonfunctional requirements. By integrating these principles into the software development lifecycle, SAD helps create systems that are robust, scalable, and aligned with long-term strategic goals.

Iteration

System requirements and solutions are continually refined, developed, and improved incrementally. This iterative process is crucial during the initial phases of system design and throughout the software development lifecycle as system designs are maintained and enhanced.

Stakeholder engagement

Effective system design relies heavily on the active participation of, and ongoing feedback from, all relevant stakeholders, including end users, managers, and cross-departmental teams. This engagement is essential for aligning the system with business needs and meeting user expectations.

Documentation and tooling

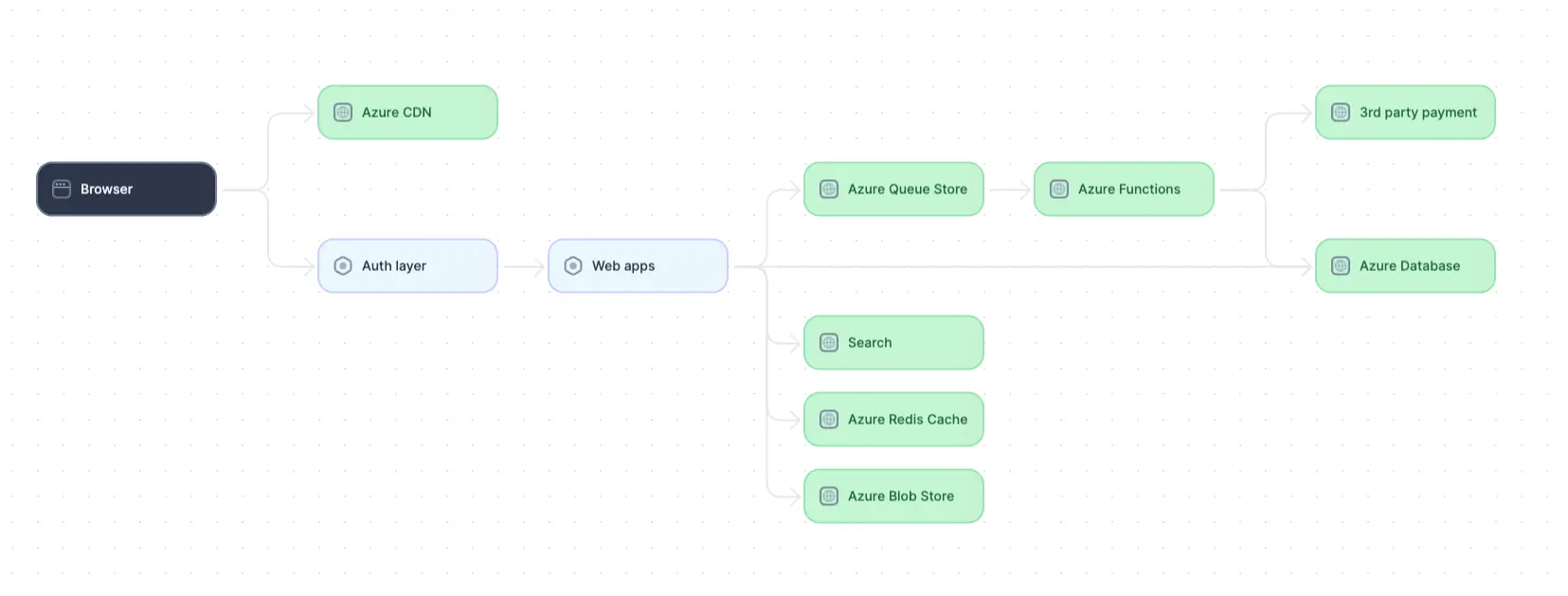

SAD employs various tools and techniques to conceptualize, plan, and document system architectures. Tools such as system architecture diagrams, data flow diagrams, use cases, jobs-to-be-done frameworks, and requirement specifications are effective for visualizing and documenting the system’s components, processes, and interactions. These artifacts engage stakeholders and enable precise management of project deliverables across the project’s iterative lifecycle.

A sample system architecture diagram (adapted from source)

This has traditionally been done using static diagrams and other forms of documentation. However, this approach creates several problems: diagrams quickly become outdated as systems evolve, important nuances of runtime behavior are often missing, and context about user interactions or cross-service dependencies is lost.

To address these issues, a new generation of tooling has evolved to automatically document system architectures, document integrations using executable code and API blocks, capture full-stack session recordings for end-to-end context, and feed these insights to AI tools for better-informed suggestions on feature development, debugging, and architectural improvements.

Architecture evaluation

Maintaining up-to-date documentation and understanding real system behavior also supports ongoing architecture evaluation throughout the SAD process. Once a baseline architecture is established, teams can continuously compare design intent with actual system behavior, identify deviations or bottlenecks, and assess the impact of proposed changes to make sure the system continues to align with KPIs and other core requirements.

Quality metrics and standards

Establishing clear, measurable quality goals and requirements is fundamental to practical architecture evaluation. By quantifying the system’s performance, reliability, and maintainability (among other quality attributes), SAD helps turn subjective judgments into objective assessments. Examples include SLAs, uptime percentage expectations (e.g., 99.999%), and end-user time savings (e.g., four hours per day per user). This approach helps ensure that the system delivers on its goals and maintains its quality as it develops and matures.
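To make an uptime target such as 99.999% concrete, it can help to convert it into a downtime budget. The following is a minimal sketch of that arithmetic; the function name `allowed_downtime_minutes` and the 365-day year are assumptions made for illustration, not part of any standard.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def allowed_downtime_minutes(availability_percent: float,
                             period_minutes: float = MINUTES_PER_YEAR) -> float:
    """Return the downtime budget implied by an availability target."""
    if not 0 <= availability_percent <= 100:
        raise ValueError("availability_percent must be between 0 and 100")
    return period_minutes * (1 - availability_percent / 100)


# Compare a few common availability targets over one year.
for target in (99.0, 99.9, 99.99, 99.999):
    budget = allowed_downtime_minutes(target)
    print(f"{target}% uptime allows about {budget:.2f} minutes of downtime per year")
```

Under these assumptions, a 99.999% target leaves a little over five minutes of downtime per year, which is why each additional "nine" tends to carry significant architectural cost.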

Evaluating architecture throughout the lifecycle

In addition to traditional stakeholder reviews, regular architecture assessments using methods like performance and load testing, simulations, prototyping, monitoring, and analytics platforms help ensure that the architecture meets functional and quality requirements. Metrics such as bug counts, code release velocity, developer turnover, and the scope of technical debt over time help track and enhance architectural quality.

System analysis phases and steps

Some approaches to system analysis entail lengthy and involved phases and steps, each of which is characterized by a specific objective and results in specific documentation output.

However, system analysis can be conducted with significantly less effort and overhead by following these two best practices:

- Keep the process lean, iterative, and embedded in the software development lifecycle (SDLC). Adopting upfront and continuous system design reviews brings these benefits:

  - It promotes more inclusive team discussions on system architecture, ensuring full alignment and visibility on potential downstream consequences of new features and changes to the system.

  - It empowers developers to align their day-to-day work with the project's long-term objectives, reducing the accumulation of architectural technical debt.

  - It ensures better knowledge sharing, allowing more team members to understand and contribute to the system architecture and all related system documentation.

- Leverage tooling that provides accurate and current information regarding your system architecture. Rather than engaging in a lengthy discovery phase, developers can use tooling to produce and maintain reference documentation that reflects the current state of the system. This allows them to quickly and effortlessly identify areas for improvement and determine whether the system’s design, functionality, and requirements contribute to the project's success.

Throughout the process of system analysis, the goals to keep in mind are:

- Requirements gathering: This involves gathering and documenting the system’s requirements from various stakeholders to understand the organization’s goals, identify pain points in existing systems, and capture functional and nonfunctional requirements for the proposed solution.

- Objective setting: Clear objectives for the new or enhanced system are defined based on the gathered requirements. For example, at X (formerly Twitter), the main objective may be to facilitate user engagement and interaction. Posting/sharing tweets, video uploads, and account management are features that serve the primary objective. Objectives can be recorded in several ways, such as architectural decision records (ADRs), service-level objectives (SLOs), or key performance indicators (KPIs).

- System examination: Reviewing the existing system before development begins, and continuing to review it as it evolves, allows engineers to identify its strengths, weaknesses, and potential areas for improvement.

- Feature design: The design phase begins when the system’s requirements and objectives are well understood. It involves conceptualizing the system’s architecture, defining its components, and communicating the proposed solution with all relevant stakeholders to ensure all requirements are being met.

- Solution implementation: Teams may decide to outline their solution implementation through a plan that includes the resources required, timelines, budgets, and strategies for testing, deployment, and user training.

Lightweight and continuous system analysis helps build scalable and maintainable applications by ensuring that comprehensive thought has gone into their design and implementation and that teams have a full understanding of how the software system works and can be evolved.

System analysis and design patterns

Employing established system analysis and design patterns can significantly streamline both processes, leading to more robust and maintainable systems. Design patterns offer proven solutions to common software problems.

For example, the Ambassador design pattern uses a dedicated component (the ambassador) as a mediator that facilitates communication between services. The ambassador improves the system’s resilience by implementing retry mechanisms and circuit breakers, and it can also handle cross-cutting tasks like logging and monitoring, allowing each individual service to focus on its core business logic.
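The idea can be illustrated with a small sketch. In practice an ambassador usually runs as a separate proxy process next to the service; the in-process `Ambassador` class and the `FlakyInventoryService` below are hypothetical names invented for this example, and only retries and logging are shown.

```python
import logging
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")
logger = logging.getLogger("ambassador")


class Ambassador:
    """Mediates calls to a remote service, adding retries and logging."""

    def __init__(self, max_attempts: int = 3, backoff_seconds: float = 0.0) -> None:
        if max_attempts < 1:
            raise ValueError("max_attempts must be at least 1")
        self.max_attempts = max_attempts
        self.backoff_seconds = backoff_seconds

    def call(self, operation: Callable[[], T]) -> T:
        last_error: Optional[ConnectionError] = None
        for attempt in range(1, self.max_attempts + 1):
            try:
                result = operation()
                logger.info("attempt %d succeeded", attempt)
                return result
            except ConnectionError as exc:
                last_error = exc
                logger.warning("attempt %d failed: %s", attempt, exc)
                if attempt < self.max_attempts:
                    # Simple linear backoff before the next attempt.
                    time.sleep(self.backoff_seconds * attempt)
        assert last_error is not None
        raise last_error


class FlakyInventoryService:
    """Hypothetical service that fails a fixed number of times, then succeeds."""

    def __init__(self, failures_before_success: int) -> None:
        self.remaining_failures = failures_before_success
        self.calls = 0

    def get_stock(self, sku: str) -> int:
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("inventory service unreachable")
        return 42


service = FlakyInventoryService(failures_before_success=2)
ambassador = Ambassador(max_attempts=3)
print(ambassador.call(lambda: service.get_stock("sku-123")), service.calls)
```

The calling code only sees a successful result or a final error; the retry policy lives entirely in the ambassador, so it can be changed without touching the service or its callers.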

The Circuit Breaker pattern improves fault tolerance and resilience by preventing failures from cascading through the system. The circuit breaker monitors the number of failures within a given time frame. If the number of failures exceeds a predefined threshold, the circuit breaker redirects requests to a fallback mechanism for a specified timeout period before allowing requests to reach the system once again. This prevents the system from being overwhelmed by a high volume of failed requests.
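The behavior described above can be sketched as follows. This is a simplified, single-threaded illustration rather than a production implementation: the class name, parameter names, and default values are assumptions, and it omits the "half-open" probing state that many circuit breaker libraries add.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Routes calls to a fallback after too many failures in a time window."""

    def __init__(self, failure_threshold: int = 3, window_seconds: float = 10.0,
                 open_seconds: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.window_seconds = window_seconds
        self.open_seconds = open_seconds
        self.clock = clock  # injectable so the timing can be tested
        self.failure_times = []
        self.opened_at: Optional[float] = None

    def call(self, operation: Callable[[], T], fallback: Callable[[], T]) -> T:
        now = self.clock()
        if self.opened_at is not None:
            if now - self.opened_at < self.open_seconds:
                # Circuit is open: skip the failing system entirely.
                return fallback()
            # Timeout elapsed: close the circuit and allow requests again.
            self.opened_at = None
            self.failure_times = []
        try:
            return operation()
        except Exception:
            # Keep only the failures that fall inside the time window.
            self.failure_times = [t for t in self.failure_times
                                  if now - t < self.window_seconds]
            self.failure_times.append(now)
            if len(self.failure_times) > self.failure_threshold:
                self.opened_at = now
            return fallback()


breaker = CircuitBreaker(failure_threshold=2, open_seconds=30.0)
print(breaker.call(lambda: "live response", lambda: "cached response"))
```

While the circuit is open, the protected operation is never invoked, which is what keeps a struggling downstream system from being hit with more requests it cannot serve.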

The Publisher/Subscriber (Pub/Sub) pattern facilitates asynchronous communication between system components by decoupling message producers (publishers) from message consumers (subscribers). Publishers’ messages are routed and delivered to the appropriate subscribers via a message broker. This pattern allows for better scalability, flexibility, and modularity within distributed systems.
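A minimal in-memory sketch can show the decoupling. Real brokers run as separate services and deliver messages over the network; the `MessageBroker` class, its method names, and the topic strings here are hypothetical and exist only for illustration. Messages are queued on publish and delivered later, so the publisher never waits for subscribers.

```python
from collections import defaultdict, deque
from typing import Callable, Deque, Dict, List, Tuple

Handler = Callable[[dict], None]


class MessageBroker:
    """Routes published messages to the handlers subscribed to each topic."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)
        self._pending: Deque[Tuple[str, dict]] = deque()

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> None:
        # The publisher only enqueues; it knows nothing about subscribers.
        self._pending.append((topic, message))

    def deliver_pending(self) -> int:
        """Deliver queued messages and return the number of deliveries made."""
        delivered = 0
        while self._pending:
            topic, message = self._pending.popleft()
            for handler in self._subscribers.get(topic, []):
                handler(message)
                delivered += 1
        return delivered


broker = MessageBroker()
emails: List[dict] = []
audit_log: List[dict] = []
broker.subscribe("order.created", emails.append)
broker.subscribe("order.created", audit_log.append)

broker.publish("order.created", {"order_id": 1})
print(broker.deliver_pending(), emails, audit_log)
```

Adding a third consumer only requires another `subscribe` call; the publishing code does not change, which is the source of the pattern's modularity.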

Other important design patterns include Command Query Responsibility Segregation (CQRS), Event Sourcing, Leader Election, and Sharding. Integrating these design patterns into the planning and implementation phases of system development not only promotes code reuse and principles of good design but also aids in addressing the intricate scenarios often encountered during system analysis.

Architecture evaluation

A structured evaluation of a proposed system architecture helps ensure that it meets project objectives and stakeholder needs and follows technical best practices. Several structured techniques exist to support this evaluation process, including the ones described below.

Architecture Tradeoff Analysis Method (ATAM)

ATAM, developed by the Software Engineering Institute (SEI) at Carnegie Mellon University, uses a structured approach to identifying and mitigating risks associated with the proposed architecture. ATAM aims to be a stakeholder-centric process where key stakeholders actively identify and prioritize quality attribute requirements and analyze tradeoffs among them.

For example, at X (formerly Twitter), ATAM could be used to evaluate the tradeoffs between increasing real-time tweet delivery performance and maintaining system reliability during high-traffic events like the Super Bowl.

Software Architecture Analysis Method (SAAM)

SAAM is another SEI-developed method that evaluates the system architecture’s ability to meet specific quality attributes, such as performance, scalability, and maintainability. It follows a scenario-based approach where stakeholders define specific scenarios that represent the system’s quality attribute requirements, and these scenarios help evaluate the architecture.

Using SAAM, X (formerly Twitter) might analyze how well the proposed changes to its architecture can handle a surge in user load during global events, ensuring scalability and maintainability without compromising the user experience.

Other software architecture analysis methods

Various methods, such as the Cost-Benefit Analysis Method (CBAM) and the Analytic Hierarchy Process (AHP), provide frameworks for assessing architectural design from different perspectives. CBAM analyzes the costs and benefits of architectural decisions. AHP helps prioritize and quantitatively evaluate the tradeoffs among competing system quality attributes by structuring them into a hierarchical framework.

X (formerly Twitter) could apply CBAM to determine the financial viability of migrating its data storage solutions to a more scalable cloud service, weighing the initial investment costs against the long-term benefits of reduced downtime and enhanced data processing capabilities.

X (formerly Twitter) might employ AHP to prioritize various system enhancement choices, such as assessing improved data security versus enhanced user interface features, by structuring these decisions into a hierarchy and quantitatively assessing their impacts on overall business objectives.
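As a worked illustration of how AHP turns pairwise judgments into priorities, the sketch below derives weights from a comparison matrix. It uses the row geometric mean approximation rather than the principal eigenvector calculation that AHP is usually described with, and the criteria and comparison values are invented for this example.

```python
import math
from typing import List, Sequence


def ahp_weights(pairwise: Sequence[Sequence[float]]) -> List[float]:
    """Approximate AHP priority weights using row geometric means."""
    n = len(pairwise)
    if n == 0 or any(len(row) != n for row in pairwise):
        raise ValueError("pairwise must be a non-empty square matrix")
    geometric_means = [math.prod(row) ** (1.0 / n) for row in pairwise]
    total = sum(geometric_means)
    return [g / total for g in geometric_means]


# Hypothetical judgments: entry [i][j] says how much more important
# criterion i is than criterion j (and [j][i] holds the reciprocal).
criteria = ["data security", "UI features", "performance"]
pairwise = [
    [1.0, 2.0, 4.0],
    [0.5, 1.0, 2.0],
    [0.25, 0.5, 1.0],
]

for name, weight in zip(criteria, ahp_weights(pairwise)):
    print(f"{name}: {weight:.2f}")
```

The resulting weights sum to 1 and can then be used to score competing enhancement options against each criterion. A full AHP analysis would also check the consistency of the judgments, which this sketch leaves out.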

The CAP theorem

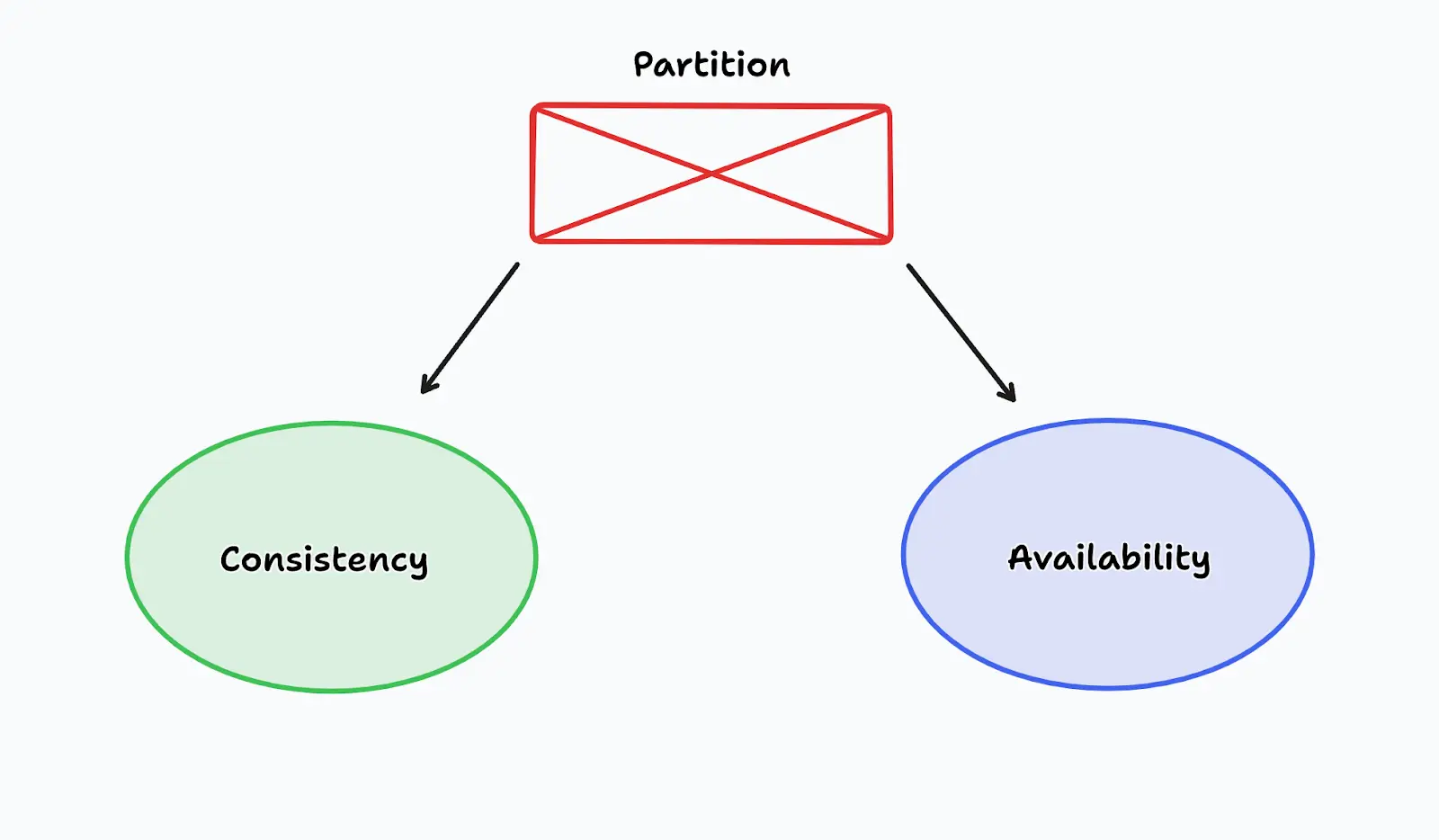

The CAP theorem, also known as Brewer’s theorem, is a fundamental principle in the design of distributed systems. It addresses three characteristics—consistency, availability, and partition tolerance—of a system’s operation in a distributed environment.

- Consistency: Every read returns the most recent successful write, so all clients see the same data at the same time, regardless of which node they connect to. This means a write must be replicated to the other nodes before it is acknowledged as successful.

- Availability: The system continues to operate (i.e., continues to return valid responses to client requests) even if one or more system nodes are down.

- Partition tolerance: The system continues to function in the face of disrupted communications (“partitions”) between nodes.

A critical insight of the CAP theorem is that partition tolerance is non-negotiable in any distributed system because network failures are inevitable. System designers must therefore strategically choose between consistency and availability when partitions occur. This tradeoff requires careful consideration.

The tradeoff between consistency and availability in the face of network partitions

For instance, a banking application might prioritize consistency over availability to prevent discrepancies in account balances, even if it means slowing down the system or temporarily denying service during a network partition. Conversely, a social networking platform like X (formerly Twitter) may prioritize availability to ensure that users can always post and interact, even if some users see slightly out-of-date data during a network partition.
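The two choices can be contrasted with a toy model. The sketch below is a deliberately simplified two-node store, not a real replication protocol: the `TwoNodeStore` class, its `mode` flag, and the `partitioned` switch are assumptions made for illustration. In "CP" mode the store refuses requests during a partition; in "AP" mode it keeps answering and lets the nodes diverge.

```python
class ServiceUnavailable(Exception):
    """Raised when a consistency-first store refuses a request."""


class TwoNodeStore:
    def __init__(self, mode: str) -> None:
        if mode not in ("CP", "AP"):
            raise ValueError("mode must be 'CP' or 'AP'")
        self.mode = mode
        self.nodes = {"a": {}, "b": {}}
        self.partitioned = False  # True when the nodes cannot reach each other

    def write(self, node: str, key: str, value: int) -> None:
        if self.partitioned:
            if self.mode == "CP":
                # Consistency first: refuse a write that cannot be replicated.
                raise ServiceUnavailable("write rejected during partition")
            # Availability first: accept locally and let the nodes diverge.
            self.nodes[node][key] = value
            return
        for data in self.nodes.values():
            data[key] = value

    def read(self, node: str, key: str):
        if self.partitioned and self.mode == "CP":
            raise ServiceUnavailable("read rejected during partition")
        return self.nodes[node].get(key)  # may be stale in AP mode


store = TwoNodeStore("AP")
store.write("a", "balance", 100)
store.partitioned = True
store.write("a", "balance", 80)
print(store.read("a", "balance"), store.read("b", "balance"))
```

In this model the availability-first store keeps serving both nodes but returns different balances from each, while the same sequence against a `TwoNodeStore("CP")` raises `ServiceUnavailable` as soon as the partition begins.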

Practical implications

Understanding and applying the CAP theorem involves more than just theoretical knowledge—it requires practical decision-making that aligns with business objectives. System architects must evaluate their system’s needs and constraints to determine the right balance among these properties. The choice fundamentally impacts the system’s architecture and can influence everything from user experience to system reliability.

Performance and scalability in distributed systems

In the context of large-scale, distributed systems, ensuring high performance and scalability is a critical concern. Several best practices and techniques are employed to achieve these goals:

- Load balancing: Load balancing strategies distribute incoming requests across multiple servers or nodes, preventing any single component from becoming overwhelmed while improving overall system performance.

- Caching: Caching mechanisms store frequently accessed data or resources in memory or at strategic locations within the system, reducing the need for repeated computations or remote data fetches and improving response times.

- Partitioning and sharding: Partitioning and sharding techniques involve dividing data or workloads across multiple nodes or databases, allowing for parallel processing and improved scalability.

- Asynchronous processing: Implementing asynchronous processing patterns like message queues and event-driven architectures can help decouple system components and improve overall throughput and responsiveness.

Another critical skill in system analysis is identifying areas in a system that will benefit from these techniques. Performance and scalability are achieved by carefully examining system limitations and strategically implementing these concepts.

Best practices in system analysis

Effective system analysis requires adherence to several best practices to ensure a comprehensive and successful outcome:

- Define requirements clearly: Clearly defining and documenting system requirements is crucial for ensuring that the proposed solution meets the organization’s needs and expectations.

- Ensure stakeholder engagement: Actively involving stakeholders throughout the analysis process helps identify potential issues, enables the gathering of diverse perspectives, and ensures buy-in from all parties involved.

- Take an iterative and agile approach: Adopting an iterative and agile approach to system analysis allows for continuous feedback, adaptation, and refinement of requirements and solutions.

- Use prototyping and proofs of concept: Building prototypes and proof-of-concept implementations can help validate design decisions, identify potential issues, and gather user feedback early in the process.

- Engage in risk management: Identifying and mitigating potential technical, financial, or organizational risks is essential for ensuring the successful implementation of the proposed system.

- Emphasize documentation and communication: Effective communication and thorough documentation throughout system analysis and design help ensure stakeholder alignment and the preservation of project knowledge.

These best practices help focus system analysis on the ultimate goal of building effective information systems. By working effectively with stakeholders and maintaining a clear, collective vision, teams can mitigate risk and deliver on their objectives.

Future trends in system analysis and design (SAD)

The field of system analysis and design constantly evolves, driven by technological advances and shifting business needs. Several emerging trends are reshaping the landscape of SAD:

- Low-code/no-code platforms: As low-code and no-code development platforms become more popular, non-technical team members are able to participate in system design and application development, potentially changing the traditional role of system analysts and designers.

- The Internet of Things (IoT) and edge computing: The widespread use of IoT devices and the rise of edge computing are introducing new challenges and considerations for system analysis and design, requiring architects to account for distributed data processing, real-time data streams, and resource-constrained devices.

- Integrated runtime observability: Tools like Multiplayer have emerged to capture full-stack session recordings, telemetry, and real-time interactions and provide developers with a new level of insight into system behavior. Engineers can correlate user interactions with backend behavior and understand how requests flow through distributed systems without hunting through scattered APM data.

- AI-driven analysis: AI coding assistants are now widely used to suggest code changes, identify potential bugs, and even recommend architectural improvements. However, they often lack the full context of how a system behaves in production. Developers can close this gap by feeding AI assistants data from session recordings, which provides runtime context for more accurate and actionable suggestions on new features, optimizations, debugging, and architecture decisions.

Together, these trends point toward a future in which the system analysis and design process is more collaborative, data-driven, and context-aware.

Conclusion

A comprehensive and well-structured approach to system analysis and design leads to technical systems that meet business needs and gracefully manage change. Teams can design and analyze systems successfully by consistently planning for the future and combining knowledge of computer science, IT, and business KPIs. In doing so, developers and business professionals create software solutions that meet modern systems’ demands and evolving requirements.