6 Best Practices for Backend Design in Distributed System

Most modern software systems are distributed systems, but designing a distributed system isn’t easy. Here are six best practices to get you started.

Most modern software systems are distributed systems. Nowadays they are a must for various reasons, among which are:

(a) the fact that data, request volume, or both are frequently too large to be handled by a single machine,

(b) products are often deployed in multiple locations, and

(c) all services need to be scalable (just think of how ‘impactful’ a simple Google search can be: it could touch at least 50+ separate services and thousands of machines!).

Designing a distributed system, however, isn’t easy. There are so many areas to master: communication, security, reliability, concurrency, and so many more.

Here are six best practices to get you started:

(1) Design for failure

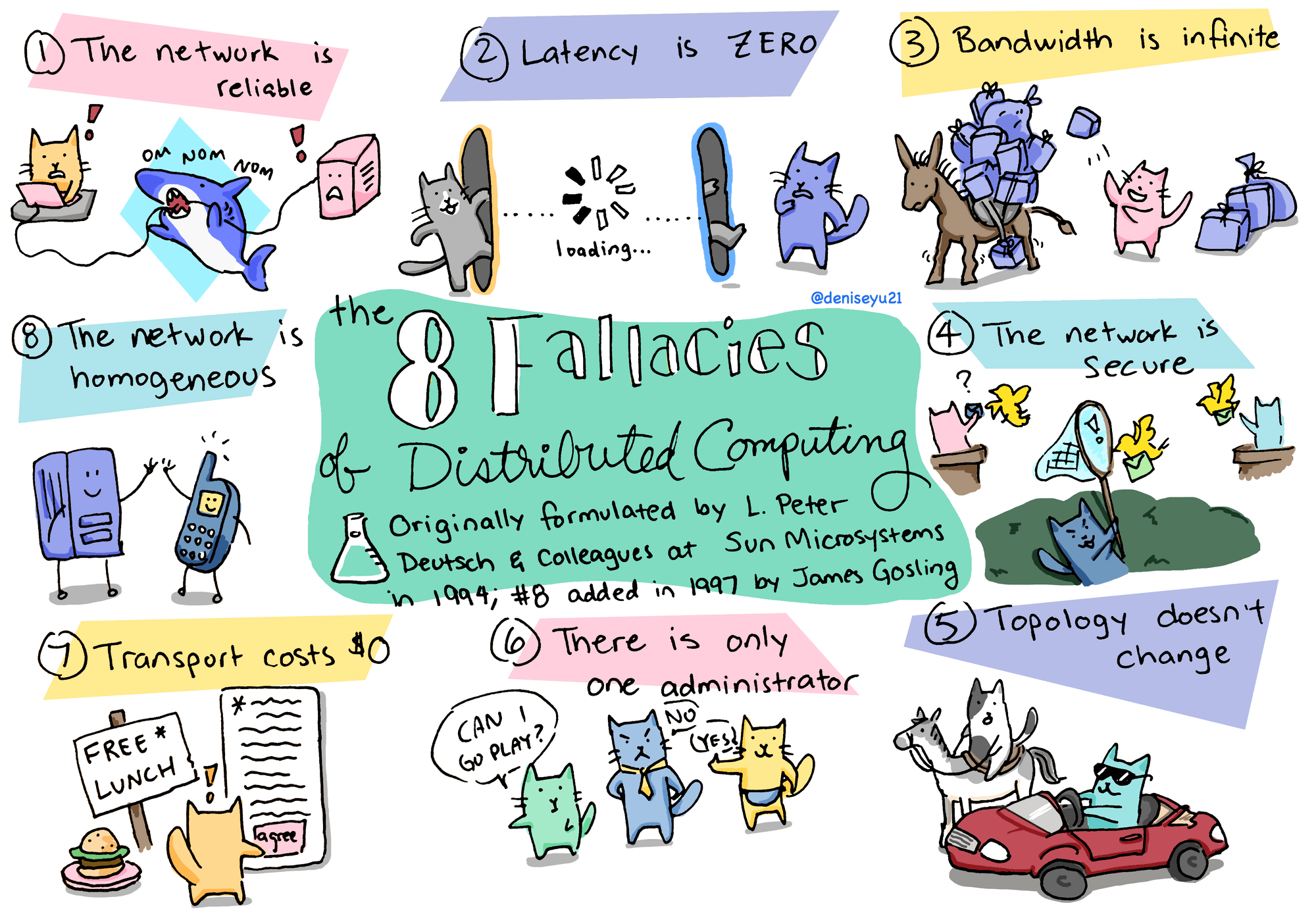

Failure is inevitable in distributed systems. Most of us are familiar with the 8 fallacies of distributed computing. We know about switches that go down, garbage collection that pauses and makes leaders “disappear,” socket writes that seem to succeed but have actually failed on some machines, a slow disk drive on one machine that causes a communication protocol in the whole cluster to crawl, etc.

Google Fellow, Jeff Dean, lists among the Joys of Real Hardware that in a typical year, a cluster will experience ~20 rack failures, ~8 network maintenance, ~1 PDU failure, etc.

Therefore, it's important to design the system for graceful degradation, build-in redundancy, and fault tolerance (e.g. using redundant hardware, load balancing, data replication, auto-scaling, and failover systems).

(2) Choose your consistency and availability models

Generally, in a distributed system, locks are impractical to implement and difficult to scale. As a result, you’ll need to make trade-offs between the consistency and availability of data. In many cases, availability can be prioritized and consistency guarantees weakened to eventual consistency, with data structures such as CRDTs (Conflict-free Replicated Data Types).

Two tips:

- Pay attention to data consistency: When researching which consistency model is appropriate for your system (and how to design the system to handle conflicts and inconsistencies), we recommend reviewing resources like The Byzantine Generals Problem and In Search of an Understandable Consensus Algorithm.

- Strive for at least partial availability: You want the ability to return some results even when parts of your system are failing. The CAP theorem is well-suited for critiquing a distributed system design, and understanding what trade-offs need to be made, although, please note that out of C, A, and P, you can’t choose CA.

(3) Build on a solid foundation from the start

Whether you’re a pre-seed startup working on your first product, or an enterprise company releasing a new feature, you want to assume success for your project.

This means you want to choose the technologies, architecture, and protocols that will best serve your final product and set you up for scale.

A little work upfront in these areas will lead to more speed down the line:

- Security - Minimize your blast radius in case of security breaches by ensuring that communication between services is secure, data is encrypted in transit and at rest, and access controls are in place to prevent unauthorized access to sensitive data.

- Containers - Containers provide a consistent runtime environment, regardless of the host operating system or infrastructure. They can be easily scaled and provide isolation between applications and their dependencies.

- Orchestration - Reduce the operational overhead and automate many of the tasks involved in managing containerized applications.

- Infrastructure as code - Define infrastructure resources in a consistent and repeatable way, reducing the risk of configuration errors and ensuring that infrastructure is always in a known state.

- Standard communication protocols - REST, gRPC, etc. can help to simplify communication between the different components and improve compatibility and interoperability.

(4) Minimize dependencies

If the goal is to have a system that is resilient, scalable, and fault-tolerant, then you need to consider reducing dependencies with a combination of architectural, infrastructure, and communication patterns.

- Service Decomposition: With this strategy, each service should be responsible for a specific business capability, and they should communicate with each other using well-defined APIs. However, monolith vs microservices is a difficult decision even for Amazon!

- Organization of code: Choosing between a monorepo or polyrepo depends on your project requirements, however, a polyrepo is better suited for larger projects where the priority is optimizing the management of dependencies and build times.

- Service Mesh: Having a dedicated infrastructure layer for managing service-to-service communication provides a uniform way of handling traffic between services, including routing, load balancing, service discovery, and fault tolerance.

- Asynchronous Communication: By using patterns like message queues, you can decouple services from one another.

(5) Monitor and measure system performance

In a distributed system, it can be difficult to identify the root cause of performance issues, especially when there are a number of systems involved.

Any developer can attest that “it’s slow” is and will be one of the hardest problems you’ll ever debug!

Application Performance Monitoring (APM) is the process of monitoring, analyzing, and reporting on how applications are performing in real time. This type of tooling is crucial in distributed systems to provide deep insight into everything from user experience to backend data processing metrics and to identify potential issues before they become critical.

However, a big trend in the observability tooling space is “observability 2.0 tools”. This new generation of tools shifts the focus from traditional monitoring and identifying operational issues, to empowering developers to understand system behaviors and reveal ‘unknown unknowns’, throughout the entire SDLC.

In other words, this approach emphasizes addressing root causes and can pinpoint specific data points and interactions that might be missed by collecting high volumes of data and only drawing conclusions from the aggregate results: a shift toward a more proactive approach and using tooling that.

(6) Don’t forget about the people

The design is as important as the implementation. While it may take some additional upfront effort, there are significant benefits to defining a structured “design process.”

Usually, the design phase is synonymous with the dev team getting together to informally brainstorm, discuss the requirements of a product, and maybe draw a sketch of a feature on a physical or digital whiteboard. This often works well: they jump right into code, submit a pull request, someone reviews it, and then they merge it in production - happy days!

However, equally as many times something goes wrong: the reviewer realizes that they weren’t aligned on the desired outcome after all and they need to refactor. Or, maybe it’s now evident that a dependency wasn’t considered during the brainstorming. Or, maybe nothing seems amiss and they merge the request, but eventually, wider system issues pop up and it becomes even more painful to go back to provide a bug fix, or refactor a feature.

A lot of the negative consequences can be avoided by submitting a design review before jumping into code. In fact, making collaborative decisions in the ‘design phase,” ensures:

- Reduced development time (and time to market): You have a clear roadmap with a fully aligned understanding of the specs for each component from the outset. There is no wasted effort reworking the feature or fixing how the components fit together later on: everyone had the opportunity to weigh in, ask questions, make revisions, and agree before the development process began.

- Better software: Significantly reduced the need for ad-hoc fixes and hard-to-maintain code in the long run. You know where the potential bottlenecks are, and how to avoid adding technical debt. The SmartBear yearly State of Code Quality survey shows the same results every time: the best way to improve software quality is “reviewing” - making sure that your development, testing, and business teams are all on the same page and collaborating throughout the development lifecycle.

- More satisfied customers: Your team can work on multiple features in parallel, knowing that all the components will fit together cohesively. Ultimately, the end users of your product will have a better experience and more frequent releases.

We’re working on a better way to design, develop, and manage distributed software - sign up for free now.