
Software Testing Strategies & Examples


Software testing is the process of evaluating an application’s behavior against functional and non-functional requirements. It involves identifying bugs, assessing performance, validating functionality, and ensuring the software functions as expected in both intended and edge-case scenarios. While there is no one-size-fits-all approach to testing, certain strategies can help teams maximize the efficiency and efficacy of their testing efforts.

This article discusses six essential software testing strategies to follow when developing your application. Each approach addresses specific challenges developers face, such as catching bugs early, ensuring stability in dynamic codebases, and maintaining application quality as the system evolves. By implementing these strategies, software engineering teams can more effectively integrate testing into the development process and ensure fast and reliable software delivery.

Summary of key software testing strategies

This table summarizes the software testing strategies covered in this article.

| Strategy | Description |
|---|---|
| Test early and often | Perform testing frequently to catch bugs at an earlier stage and to maintain development velocity. |
| Automate testing where possible | Well-maintained automated test suites save costly rework by automatically catching and reporting bugs. |
| Include manual testing | While automated testing excels in handling repetitive, high-volume, or time-consuming tasks, manual testing should be employed strategically for exploratory testing, usability testing, and validating user flows that are impractical to automate. |
| Increase test coverage | Test as much of the code as practical to minimize the risk of missed bugs. While 100% test coverage is often unrealistic, teams should strike a balance between testing rigor, timelines, and other limiting factors. |
| Perform frequent regression tests | Automated regression tests ensure stability as the code base evolves. Regression tests should be included in CI/CD pipelines and maintained as the application evolves. |
| Improve testing over time | Enhance your testing strategy by implementing continuous testing in CI/CD, monitoring key metrics, configuring static analysis tools, and adding new testing types. |

Test early and often

Testing earlier in the development process saves time and reduces the cost of refactoring. Let’s contrast two different two-week release processes:

The first release process does not worry about testing until development work is done:

- Once the software developer has completed their feature, there are four days left before the release.

- The developer contacts the QA tester and provides a link to the ticket.

- The QA tester has four days to complete their testing.

- On the first day, QA asks clarifying questions as the ticket does not provide enough information. They ask how to access the new feature and the system's login information.

- On the second day, QA creates their manual test plan, which is approved by the product teams and the developer.

- On the third day, QA executes the tests in the test plan and logs bugs in their issue-tracking software. They find three critical bugs and five more that product teams deem non-critical. By the end of the day, the developer is aware of these issues.

- The developer scrambles to fix the critical issues on the last day of the release cycle. QA must work closely with the developer to retest any deployed fixes. By the end of the day, only two of the three critical bugs are confirmed as fixed, and the third has been escalated to product teams to assess how it will impact customers. All five non-critical issues remain in the code base.

The second release process performs testing activities alongside development work:

- The QA team is invited to participate in sprint planning alongside the engineering and product teams. This allows QA to ask clarifying questions early and start drafts of test plans.

- The development team uses unit tests as part of their development cycle, which allows them to catch some incompatibility issues early on.

- Once the developer has completed their feature, they run an automated regression test suite set up by the QA team. This test suite catches several issues. The developer fixes these issues before passing the work to the QA team.

- The QA tester has four days to complete their testing.

- On the first day, the QA tester goes back to the ticket from the planning session and opens their draft test plan. They tweak this test plan and send it for review. By the end of the day, it has been reviewed by engineering and product stakeholders.

- On the second day, the QA tester executes the test plan. They find one critical issue and two non-critical issues. The developer starts fixing these issues.

- By lunchtime on the third day, the developer has reported the issues as fixed. QA re-tests the code, and all fixes appear to work. The automated regression tests are run once again and succeed. The ticket is closed.

- On the fourth day, the QA tester uses any extra time to help teammates, refactor existing tests, or expand test coverage.

These contrasting examples highlight a few benefits that testing early and often can bring:

- Reduce last-minute scrambles: As seen in the second workflow, testing early catches issues at an earlier stage and reduces the time pressure that deadlines bring.

- Involve QA teams early: Collaborating with QA teams in sprint planning facilitates better communication between teams. This improved alignment reduces handoff times when transitioning tickets from development to testing.

- Developers are more involved in testing: When developers catch and fix issues through unit and regression tests, QA can focus on creating more complex test plans and improving automation.

- Less risk of critical issues at release: Continuous testing reduces the chance of releasing software with unresolved issues, leading to better overall application quality.

- Less technical debt: When bugs and issues are caught before they become embedded in the system, less technical debt is accumulated over time. This keeps the system robust and prevents costly rework down the line.

Automate testing where possible

As applications scale, manually testing all existing and new functionality becomes impractical and inefficient. Automation helps address this issue by enabling fast, consistent, and repeatable validation. This allows tests to be run more frequently, which reduces error rates and leads to better stability as the application evolves.

In general, the best tests to automate are those that perform repetitive and predictable testing tasks to save time and reduce human error. Here are some types of tests to consider automating:

- Unit tests: Unit tests allow developers to test individual code components in isolation. Unit testing is now widely adopted across the industry: JetBrains' 2023 annual survey of the developer community found that 63% of respondents used unit testing in their projects.

- Integration tests: These tests ensure that different modules or components of an application work together as intended and correctly communicate with external systems or services.

- End-to-end (E2E) tests: E2E tests validate complete user workflows, from start to finish, and ensure the entire application functions as expected in real-world scenarios.

- Regression tests: Regression tests are run after each code change to determine whether the change interferes with existing functionalities.

- Performance tests: These tests measure and evaluate application speed, stability, and scalability under load. They are usually performed using automated tools to simulate user load and interactions.

- UI tests: Tools like Selenium can be used to automate web browser interactions to test user interface elements and workflows.

- Cross-browser and OS tests: Tools like BrowserStack can be used to run tests in the cloud across many combinations of browsers, operating systems, and devices to ensure compatibility.

- AI testing: Typical AI testing tools help developers be more productive when writing test scripts. However, new leading-edge tools like Qualiti can analyze a website and generate original test cases in popular testing frameworks like Playwright.
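To make the first item in the list concrete, here is a minimal unit test sketch. The `calculate_order_total` function and its discount rule are hypothetical, invented for illustration; the tests use plain `assert` statements, which is the style pytest expects for functions whose names start with `test_`.

```python
# Hypothetical function under test: applies a percentage discount to an order total.
def calculate_order_total(prices: list[float], discount_percent: float = 0.0) -> float:
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount_percent must be between 0 and 100")
    subtotal = sum(prices)
    return round(subtotal * (1 - discount_percent / 100), 2)


# Each unit test checks one behavior of the function in isolation.
def test_total_without_discount():
    assert calculate_order_total([10.0, 5.5]) == 15.5


def test_total_with_discount():
    assert calculate_order_total([100.0], discount_percent=20) == 80.0


def test_empty_order_is_zero():
    assert calculate_order_total([]) == 0.0


def test_invalid_discount_is_rejected():
    try:
        calculate_order_total([10.0], discount_percent=150)
    except ValueError:
        pass  # Expected: out-of-range discounts are rejected
    else:
        raise AssertionError("expected ValueError for a discount above 100%")
```

Because each test exercises only one function with fixed inputs, these tests run in milliseconds and rarely break for reasons unrelated to the code under test.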

Creating automated tests

Automated tests are written in a programming language: either the proprietary language of the testing tool you have selected or a general-purpose language of your choice. Here is an example of an automated test written in Python with Selenium, a popular browser automation framework:

```python
from selenium import webdriver
from selenium.common.exceptions import NoSuchElementException, TimeoutException
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait


def test_login_with_valid_credentials():
    # Initialize the Chrome driver
    driver = webdriver.Chrome()
    try:
        # Navigate to the login page
        driver.get('https://example.com/login')

        # Wait for the username field to appear, then enter valid credentials
        username_input = WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.ID, 'username'))
        )
        username_input.send_keys('user@example.com')

        # Enter password
        password_input = driver.find_element(By.ID, 'password')
        password_input.send_keys('password123')

        # Click the login button
        login_button = driver.find_element(By.ID, 'loginButton')
        login_button.click()

        # Wait for the dashboard page to load
        WebDriverWait(driver, 10).until(EC.title_contains("Dashboard"))

        # Assertion to confirm successful login
        assert "Dashboard" in driver.title, "Test Case TC-001: Login failed"
    except (TimeoutException, NoSuchElementException) as e:
        # Report missing elements or slow pages as a labeled test failure
        raise AssertionError(f"Test Case TC-001 failed with error: {e}") from e
    finally:
        # Always close the browser, whether the test passes or fails
        driver.quit()
```

The test script simulates a user logging in through the Chrome web browser. The comments within the script clarify its logic, making it easy to read and maintain.

How to automate

While automation is one of the most effective ways to improve testing frequency, efficacy, and efficiency, writing and maintaining automated tests requires a time investment from both engineering and QA teams. To help streamline the automated testing process, we suggest keeping the following best practices in mind.

Start small and expand gradually

Start by automating unit and smaller integration tests to serve as a foundation and kickstart the automation process. Expand your test coverage gradually over time to get team members to buy into the process and ensure they do not become overwhelmed by balancing test automation work with their other priorities.

Allocate time for initial setup and maintenance

In addition to the initial effort to set up automated tests, you must also consider the maintenance costs of keeping automated test suites up to date. As your application evolves, your automated tests must evolve alongside it. Conduct test suite audits and dedicate time in sprints to address issues as they arise.

Automate less fragile test types

Test fragility refers to how easily a test breaks due to changes in the system, even when those changes are unrelated to the functionality under test. Unit tests are typically the least fragile type of test because they target specific code functions and are less affected by changes to other parts of the codebase. Tests that cover more functionality, such as integration and E2E tests, require more maintenance because they rely on multiple components and, in many cases, multiple environments. While it is helpful to include integration and E2E tests in an automated suite, less fragile unit tests should make up the majority of the suite for better reliability and sustainability.
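One common way to keep unit tests from becoming fragile is to replace external dependencies with test doubles, so the test exercises only the function's own logic. The sketch below uses Python's standard `unittest.mock` module; `format_greeting` and the `user_client` object it depends on are hypothetical.

```python
from unittest.mock import Mock


# Hypothetical function under test: builds a greeting from data fetched by a client.
def format_greeting(user_client, user_id: int) -> str:
    user = user_client.get_user(user_id)
    name = user.get("display_name") or user.get("username", "there")
    return f"Hello, {name}!"


def test_greeting_prefers_display_name():
    # The mock stands in for a real API client, so the test does not depend
    # on network access, credentials, or the state of another service.
    client = Mock()
    client.get_user.return_value = {"display_name": "Ada", "username": "ada42"}

    assert format_greeting(client, 7) == "Hello, Ada!"
    client.get_user.assert_called_once_with(7)


def test_greeting_falls_back_to_username():
    # An empty display name should fall back to the username.
    client = Mock()
    client.get_user.return_value = {"display_name": "", "username": "ada42"}

    assert format_greeting(client, 7) == "Hello, ada42!"
```

Because the client is mocked, changes to how the real service is deployed or configured will not break these tests; catching that kind of breakage is the job of integration and E2E tests.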

Consider costs

Setting up and running functional tests (such as unit, integration, and E2E tests) is typically less costly than performance tests because performance tests require infrastructure to simulate user load. Because of this, large-scale performance tests (e.g., load, stress, or soak tests) may need to be run less frequently or generate less load than would be seen in production.
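Dedicated tools handle large-scale load tests, but the core idea can be sketched in a few lines: issue many concurrent calls and summarize their latency. In this sketch, `handle_request` is a hypothetical stand-in for a real call to the system under test (for example, an HTTP request), and the default request count and concurrency level are arbitrary assumptions.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(i: int) -> None:
    # Hypothetical stand-in for one request to the system under test
    # (for example, an HTTP call to a staging environment).
    time.sleep(0.01)


def timed_call(i: int) -> float:
    # Measure the latency of a single request, in seconds.
    start = time.perf_counter()
    handle_request(i)
    return time.perf_counter() - start


def run_load(total_requests: int = 200, concurrency: int = 20) -> dict:
    # Simulate `concurrency` concurrent users sending `total_requests` requests in total.
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, range(total_requests)))
    # Nearest-rank 95th percentile, computed with integer math: ceil(0.95 * n) - 1.
    p95_index = (95 * len(latencies) + 99) // 100 - 1
    return {
        "requests": len(latencies),
        "mean_s": statistics.mean(latencies),
        "p95_s": latencies[p95_index],
        "max_s": latencies[-1],
    }


if __name__ == "__main__":
    print(run_load())
```

A single machine running threads like this cannot approximate production traffic, which is exactly why realistic load, stress, and soak tests need separate infrastructure and tend to run less often.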


Investigate test failures

The goal of an effective software testing strategy is to create test suites where test failures have real meaning. In other words, a failing test should clearly signal to developers that something in the application deserves attention.

Smaller-scale unit tests are generally easier to investigate because their scope is small and their functionality is isolated. However, failures within more complex integration and end-to-end tests may involve tracing request paths that span multiple services and dependencies. In these cases, a test could fail for many reasons, such as:

- Genuine bugs in the codebase

- Flaws in the test design

- Unstable dependencies or infrastructure (containers, network services, CI/CD pipelines, etc.)

- Problems with test data (stale, corrupted, or missing mocks and fixtures)
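One quick triage step, sketched below, is to rerun a failing test several times. A test that fails only some of the time points toward unstable dependencies, timing, or test data, while a consistent failure is more likely a genuine bug or a flaw in the test itself. The `classify_failure` helper and its default of five runs are illustrative assumptions, not a standard tool.

```python
from typing import Callable


def classify_failure(test_fn: Callable[[], None], runs: int = 5) -> str:
    """Rerun a test several times and report how consistently it fails."""
    failures = 0
    for _ in range(runs):
        try:
            test_fn()
        except Exception:  # Includes AssertionError raised by failed asserts
            failures += 1
    if failures == 0:
        return "passing"
    if failures == runs:
        # More likely a genuine bug or a broken test
        return "consistent failure"
    # More likely flaky infrastructure, timing, or test data
    return "intermittent failure"
```

A rerun only separates intermittent from consistent failures; it does not identify which of the causes above applies, so the logs and traces still need to be examined.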

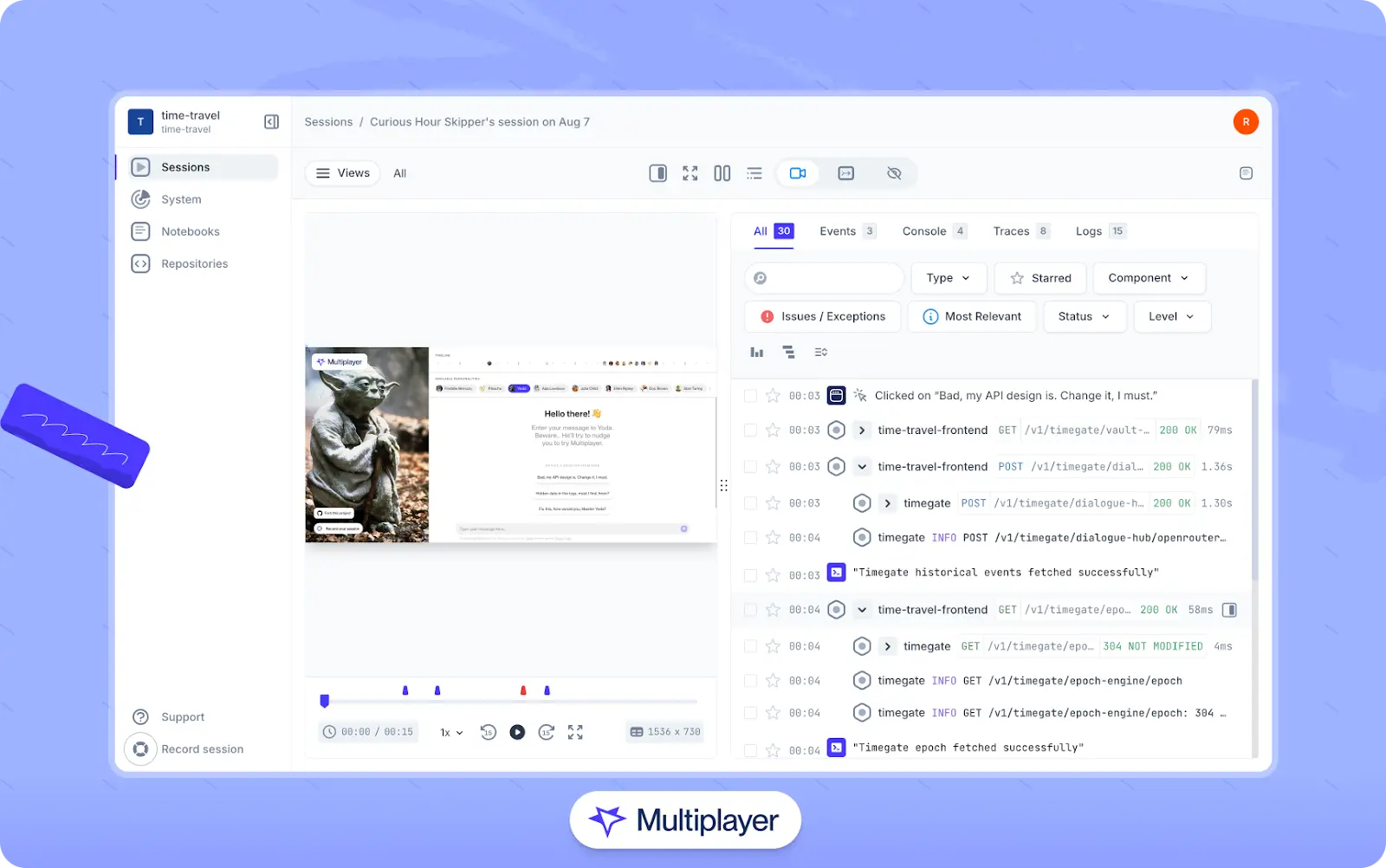

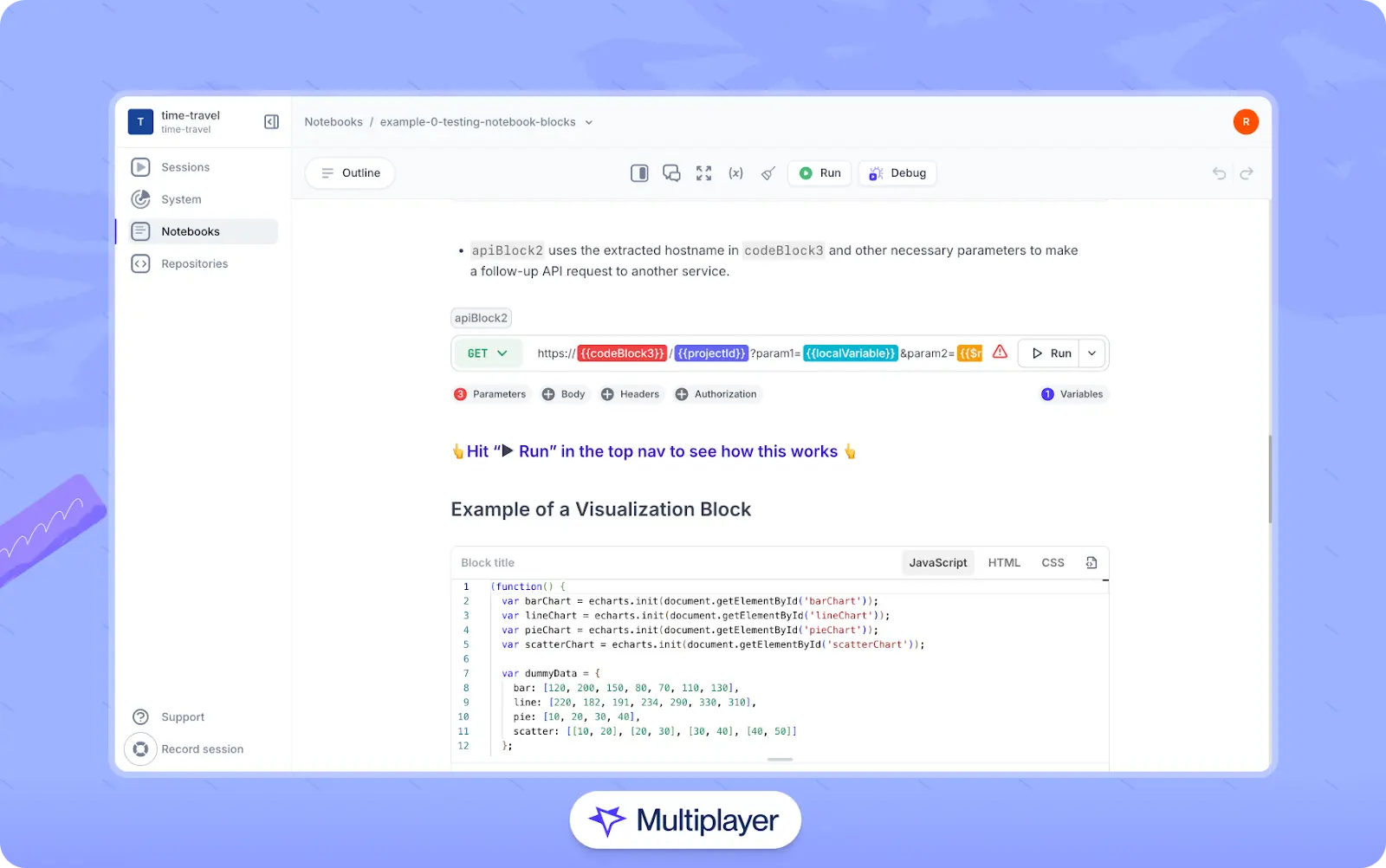

A typical resolution process involves retracing steps, reproducing issues, and digging through logs to understand what actually happened. However, tools like Multiplayer's full-stack session recordings can make investigating failures faster and more reliable. Instead of piecing together fragments from test output and logs, developers can replay the entire sequence of events across the frontend, backend, and connected services.

Multiplayer’s full stack session recordings

Include manual testing

While many organizations prioritize automated testing for its efficiency, manual testing is still an important part of a robust testing strategy. Rather than viewing manual and automated testing as opposing strategies, the most effective test plans balance manual and automated testing to maximize test coverage. Consider manual testing in the following scenarios:

- Exploratory testing: In exploratory testing, testers manually engage with the application to uncover unexpected issues and understand its behavior in real-world scenarios.

- Usability testing: Usability testing assesses how easily users can navigate and engage with the application. While automated tools can be used to track click paths and metrics like time on page, this form of testing requires real users to interact with the application manually.

- Unique or complex scenarios: Evaluating complicated tasks or specific behaviors that require human intuition can be challenging to automate. In these scenarios, it may be more efficient to conduct manual testing.

It is also important to note that conducting manual testing can help expand automated testing efforts, as QA testers who perform manual tests may identify certain test cases as candidates for automation.

Creating manual test plans

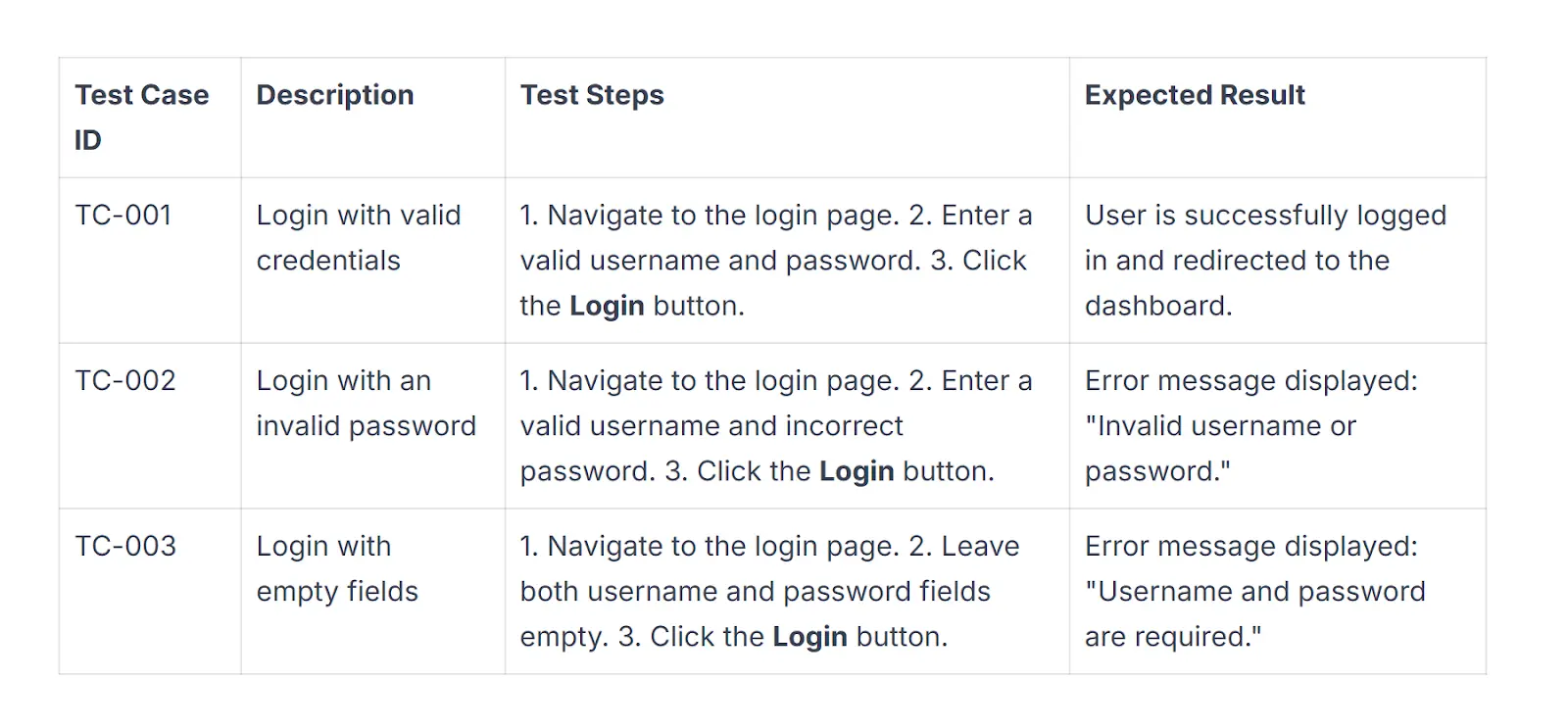

To encourage QA as a shared responsibility, test plans should be created and stored in a location that is easily accessible to testers, developers, and other stakeholders. For example, take a look at these test cases created using Multiplayer notebooks:

As you can see, each manual test case has the following:

- An ID so it can be referenced in test reports and other documentation

- A short description of the test objective

- Clear, detailed steps for test execution

- Expected results that clearly identify pass/fail criteria

In addition to these elements, manual test cases may include assumptions and preconditions (e.g., the user has already logged in or performed specific actions within the system) and information on the test data (such as usernames, IDs, or configuration settings) needed for the test. When writing manual test cases, the goal is to create thorough, repeatable, and efficient tests for QA professionals to execute.
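Teams that keep test cases in a repository can capture the same elements as structured data, which makes incomplete cases easy to detect. The `ManualTestCase` schema below is a hypothetical example, not Multiplayer's format or any tool's API; the TC-001 case mirrors the Selenium login test shown earlier.

```python
from dataclasses import dataclass, field


@dataclass
class ManualTestCase:
    # Required elements: ID, objective, steps, and expected result
    case_id: str
    objective: str
    steps: list[str]
    expected_result: str
    # Optional elements: preconditions and test data
    preconditions: list[str] = field(default_factory=list)
    test_data: dict[str, str] = field(default_factory=dict)

    def missing_fields(self) -> list[str]:
        """Return the names of required fields that are empty."""
        required = {
            "case_id": self.case_id,
            "objective": self.objective,
            "steps": self.steps,
            "expected_result": self.expected_result,
        }
        return [name for name, value in required.items() if not value]


login_case = ManualTestCase(
    case_id="TC-001",
    objective="Log in with valid credentials",
    steps=[
        "Open the login page",
        "Enter a valid username and password",
        "Click the login button",
    ],
    expected_result="The dashboard page is displayed",
    test_data={"username": "user@example.com"},
)
```

A check like `missing_fields()` could run in CI over all stored test cases so that incomplete cases are flagged before a tester tries to execute them.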

Increase test coverage

Test coverage is the amount of application code that is under test. Increasing code coverage reduces the risk of missed bugs. While achieving full test coverage is theoretically ideal, it is unrealistic given the scope of many organizations' software projects and resource constraints. This section explores some practical ways to strike a balance between thorough test coverage and other development priorities.

Set realistic coverage goals

Coverage goals should be practical and realistic, given the project's scope and nature. While 70-90% test coverage is widely regarded as a balanced target in software development, numerous factors may influence this number for different projects at different points in time. Such factors include the application's business sector, codebase size, current and historic levels of test coverage, and the percentage of development time that can be dedicated to QA.

Measure test coverage

Measuring test coverage with a consistent method makes results comparable over time. Common methods include line, branch, and function coverage:

- Line coverage measures the percentage of lines of code executed during testing. For example, if a file has 100 lines, and tests cover 80 of them, the line coverage is 80%.

- Branch coverage tracks whether each branch of a control statement, such as an `if`/`else` statement, is executed during testing. For example, for an `if` statement with a `true` path and a `false` path, both paths must be tested to achieve 100% branch coverage.

- Function coverage measures how many functions or methods have been called by tests. It aims to make sure all defined functions are tested at least once. For example, if a module has 10 functions and 8 are tested, function coverage is 80%.

To automate this work, use tools such as coverage.py for Python. These tools can generate reports and visualizations to help you identify untested code and improve overall code quality.
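The difference between line and branch coverage is easiest to see on a tiny function. `shipping_fee` is hypothetical; the commands in the trailing comment use standard coverage.py options, and the 80% threshold is an arbitrary example from within the range discussed above.

```python
# Hypothetical function under test.
def shipping_fee(is_member: bool) -> float:
    fee = 5.0
    if is_member:
        fee = 0.0
    return fee


def test_member_ships_free():
    # This single test executes every line of shipping_fee (100% line coverage),
    # but never takes the path where the `if` condition is false.
    assert shipping_fee(is_member=True) == 0.0


def test_non_member_pays_fee():
    # Adding this test covers the false branch, bringing branch coverage to 100%.
    assert shipping_fee(is_member=False) == 5.0


# With coverage.py installed, measure branch coverage from the shell:
#   coverage run --branch -m pytest
#   coverage report -m --fail-under=80
# `-m` lists missing lines; `--fail-under` exits non-zero below the threshold.
```

Run with only the first test, coverage.py would report every line as executed but flag the untaken branch, which line coverage alone would hide.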

Track test coverage progress

Use the previously mentioned line, branch, and function coverage metrics to assess the thoroughness of code testing. Monitor your test suites' pass and fail rates to identify recurring issues and maintain reliability. Analyze your test reports to identify areas of frequent failure and use this information to increase test coverage in critical areas of your application. Many popular issue-tracking solutions provide reporting tools that make this possible.

Prioritize your tests

Because 100% test coverage is usually unrealistic, it is important to prioritize tests based on key factors that maximize testing efficiency and effectiveness.

First, potential risk should guide your approach. Risk-based prioritization means focusing on areas of the codebase that are mission-critical, complex, or prone to errors. For instance, user workflows such as authentication and payment typically carry higher stakes than less integral operations. Historical data should also play a key role in this process; analyzing past bug trends can help identify areas that require more rigorous testing and ensure that previously problematic components receive sufficient attention.
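Risk-based prioritization can be made explicit with a simple scoring scheme. The sketch below assumes the team rates each feature area's impact and complexity on a 1 to 5 scale and counts bugs traced to it historically; the areas, ratings, and the weighting in `risk_score` are all hypothetical and should be tuned to your own context.

```python
from dataclasses import dataclass


@dataclass
class FeatureArea:
    name: str
    impact: int      # 1 (minor) to 5 (mission-critical), rated by the team
    complexity: int  # 1 (simple) to 5 (very complex)
    past_bugs: int   # Bugs traced to this area in recent releases


def risk_score(area: FeatureArea) -> int:
    # Hypothetical weighting: business impact counts double, and bug history adds to the score.
    return area.impact * 2 + area.complexity + area.past_bugs


def prioritize(areas: list[FeatureArea]) -> list[str]:
    # Highest-risk areas first.
    return [a.name for a in sorted(areas, key=risk_score, reverse=True)]


areas = [
    FeatureArea("settings page", impact=1, complexity=2, past_bugs=0),
    FeatureArea("payment", impact=5, complexity=4, past_bugs=3),
    FeatureArea("authentication", impact=5, complexity=3, past_bugs=1),
]
print(prioritize(areas))  # ['payment', 'authentication', 'settings page']
```

The exact numbers matter less than making the criteria visible, so that the team can discuss and revise why one area receives more testing than another.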

It is also important to find an effective distribution of unit, integration, and end-to-end tests based on each test’s maintenance overhead and the amount of time and resources it takes to run. In general, teams should write the largest number of unit tests because they are relatively straightforward to write and maintain, offer rapid feedback on the core logic of the application, and can often be reused in different parts of the application. The next priority should be small-scale integration tests that cover interactions between relatively few components, while more extensive integration and end-to-end tests should be used strategically to cover the most critical user flows.

Track user journeys

While automated testing can closely simulate user activity, there is no substitute for insights into actual user behavior. Tracking user journeys shows how real users interact with your software: they may use the system in unexpected ways or frequently follow journeys that current test suites do not prioritize. Identifying these behaviors informs testing efforts, from deciding which tests to prioritize to identifying important edge cases.
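As a rough illustration, journey data can be compared against the journeys your tests already cover. The sketch assumes each session has been reduced to an ordered tuple of page names; the sessions, the set of tested journeys, and the `min_count` threshold are hypothetical.

```python
from collections import Counter

# Hypothetical session data: each session is the ordered sequence of pages a user visited.
sessions = [
    ("home", "search", "product", "cart", "checkout"),
    ("home", "search", "product", "cart", "checkout"),
    ("home", "account", "order-history", "return"),
    ("home", "account", "order-history", "return"),
    ("home", "account", "order-history", "return"),
    ("home", "product", "cart"),
]

# Journeys that the current test suite already covers (hypothetical).
tested_journeys = {
    ("home", "search", "product", "cart", "checkout"),
}


def untested_frequent_journeys(sessions, tested, min_count=2):
    """Return journeys seen at least `min_count` times that have no test, most common first."""
    counts = Counter(sessions)
    return [
        (journey, count)
        for journey, count in counts.most_common()
        if count >= min_count and journey not in tested
    ]


print(untested_frequent_journeys(sessions, tested_journeys))
# [(('home', 'account', 'order-history', 'return'), 3)]
```

Here the most common real journey, returning an order, has no test at all, which makes it a strong candidate for new coverage.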

Leverage full-stack session recordings

A common barrier to expanding test coverage is the time and effort required to understand the system, design meaningful tests, and ensure they are reliable and maintainable. This is another use case for a tool like Multiplayer. Developers can capture recordings of end-to-end application behavior, including frontend screens, user actions, backend traces, metrics, logs, and full request/response content and headers, via a browser extension, in-app widget, or SDK. Once a session is captured, developers can use the tool to automatically generate a Multiplayer notebook from the recording that includes a runnable test script with API calls, payloads, and code logic.

Multiplayer notebooks

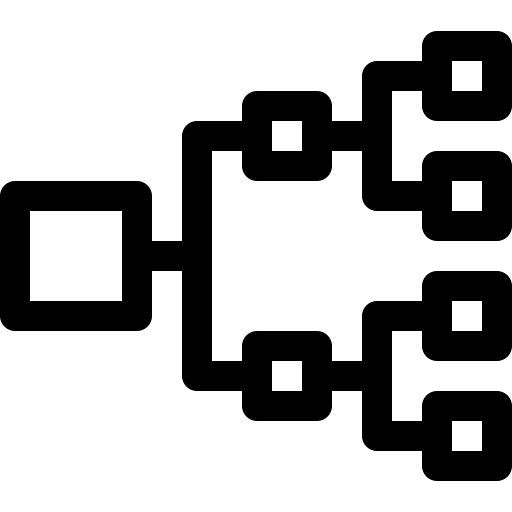

Perform frequent regression tests

Regression tests are run after code changes to ensure that the changes do not break existing functionality. Regular regression testing is a key practice in agile environments to maintain software stability and quality over time.
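A common pattern is to add a regression test whenever a bug is fixed, reproducing the exact input that triggered it so the bug cannot quietly return. In the sketch below, `parse_quantity` and the bug it guards against (quantities with a thousands separator causing a crash) are hypothetical.

```python
def parse_quantity(raw: str) -> int:
    """Parse a quantity field from a form submission."""
    # Fix for a (hypothetical) past bug: values with a thousands separator,
    # such as "1,200", used to raise ValueError.
    value = int(raw.replace(",", "").strip())
    if value < 0:
        raise ValueError("quantity cannot be negative")
    return value


def test_regression_thousands_separator():
    # Reproduces the exact input from the original bug report.
    assert parse_quantity("1,200") == 1200


def test_plain_quantity_still_works():
    # Guards the behavior that existed before the fix.
    assert parse_quantity("7") == 7
```

Naming the test after the bug (or including the ticket ID in its name) helps future maintainers understand why it exists before they consider removing it.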

When creating a regression test suite, consider the following:

- Integrate automated tests with CI/CD. Automated regression tests should be included in an organization's CI/CD pipeline, and a failing regression test should block the change from being merged into the code base.

- Consider test duration and avoid unnecessary delays. Regression tests should run quickly enough to integrate smoothly into development workflows and run with each code change. If a regression test suite takes too long to run, it becomes impractical and slows down the development process. To cut down on the time taken to run tests, teams may conduct an initial round of smoke tests before a more comprehensive suite is run.

- Use manual tests if necessary. Regression tests are typically automated due to the repetitive nature of verifying unchanged parts of the application. However, manual regression testing may still be applied to critical features or areas where automation is not practical.
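The smoke-first approach from the list above can be sketched as a two-stage runner: run a small set of smoke tests, and run the full regression suite only if they pass. The tuple format and the exit-code convention here are illustrative assumptions; in practice, test runners provide this selection (pytest, for example, can select marked tests with `-m smoke`).

```python
from typing import Callable

# Each entry: (test name, test function, is_smoke_test). Entries are hypothetical.
SuiteEntry = tuple[str, Callable[[], None], bool]


def run_tests(entries: list[SuiteEntry]) -> list[str]:
    """Run each test and return the names of the tests that failed."""
    failed = []
    for name, test_fn, _ in entries:
        try:
            test_fn()
        except Exception:
            failed.append(name)
    return failed


def run_pipeline(entries: list[SuiteEntry]) -> int:
    """Run smoke tests first and the full regression suite only if they pass.

    Returns a process-style exit code: 0 lets the change proceed, 1 blocks it.
    """
    smoke_failures = run_tests([e for e in entries if e[2]])
    if smoke_failures:
        print(f"Smoke tests failed; skipping full suite: {smoke_failures}")
        return 1
    full_failures = run_tests([e for e in entries if not e[2]])
    if full_failures:
        print(f"Regression tests failed: {full_failures}")
        return 1
    return 0
```

Failing fast on smoke tests saves pipeline time when a change is obviously broken, and the non-zero exit code is what a CI system would use to block the merge.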

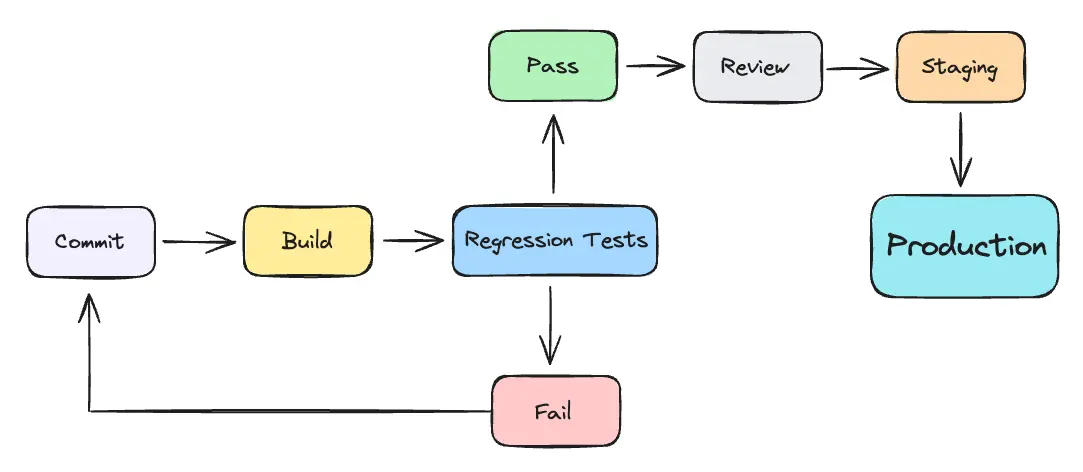

The diagram below shows the role of regression tests in an automated CI/CD flow:

Regression tests in the CI/CD process.

As you can see, if regression tests pass, the code is sent to the review stage and subsequently merged into production. If the tests fail, the developer must return to the code and create a passing build.

Regression test maintenance

Be cautious when maintaining your regression test suite. To prevent the suite from becoming too large, you must strike a balance between adding new tests, refactoring existing tests, and removing outdated tests. We recommend prioritizing the following factors in your regression test suite:

- Core business functionality: Verify that important business features, such as payment flows and authentication, work as expected.

- Customer-facing features: Ensure high-visibility parts of the system and crucial user journeys are thoroughly validated.

- Compliance and security: Test areas handling sensitive data or that are subject to regulations.

- Complex integrations: Test parts of your system where integrations and critical data flows occur to ensure changes did not break any dependencies.

Developers within an organization will typically notify their product and QA stakeholders if a regression test suite has become too large. When this occurs, QA teams are tasked with either speeding up the automated tests (often by selecting more efficient tooling or parallelizing tests) or removing test cases entirely to make the overall test suite more efficient. High-performing CI/CD pipelines aim for 10-30 minutes for core regression suites that check the most critical features, although teams may run longer test suites after hours. This approach lets developers quickly verify that recent changes have not broken existing functionality while keeping QA environments available for other development and testing activities.
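Parallelizing works best when each parallel job receives a similar share of the total runtime. One simple way to split a suite, sketched below under the assumption that per-test durations are available from previous CI runs, is the greedy longest-first heuristic: assign each test, slowest first, to the shard with the least total time so far. The test names and durations are hypothetical.

```python
import heapq


def shard_tests(durations: dict[str, float], num_shards: int) -> list[list[str]]:
    """Split tests into shards with roughly equal total duration (greedy, longest first)."""
    # Min-heap of (total_seconds, shard_index) so the least-loaded shard is on top.
    heap = [(0.0, i) for i in range(num_shards)]
    shards: list[list[str]] = [[] for _ in range(num_shards)]
    for name, seconds in sorted(durations.items(), key=lambda kv: kv[1], reverse=True):
        total, index = heapq.heappop(heap)
        shards[index].append(name)
        heapq.heappush(heap, (total + seconds, index))
    return shards


durations = {
    "test_checkout_flow": 240.0,
    "test_login": 30.0,
    "test_search": 120.0,
    "test_profile_update": 90.0,
    "test_password_reset": 60.0,
}
for shard in shard_tests(durations, num_shards=2):
    print(shard, sum(durations[t] for t in shard))  # Both shards total 270.0 seconds
```

The heuristic does not guarantee a perfect split, but it is simple, fast, and usually close enough to cut wall-clock time roughly in proportion to the number of shards.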

Improve testing over time

Fostering a culture of continuous improvement is highly beneficial in all aspects of software development, and testing is no exception. As your software evolves, your software testing strategies will likely need to be adjusted or expanded to maintain the quality of your application.

Here are some ways to improve testing over time:

- Implement continuous testing in CI/CD: Including continuous testing in your CI/CD pipelines ensures testing is performed regularly.

- Monitor metrics: Metrics provide insights into things like pass and fail rates, bug creation, and test execution times. These metrics can inform decisions about future testing strategies.

- Iterate on test plans: Review and optimize your test plans regularly, removing redundant or outdated test cases so the plans stay aligned with your application's current functionality.

- Use automated tools: Many tools automate different parts of the software testing process. Stay current on market offerings and look for software and tooling that you can integrate into your current workflows.

- Use static analysis tools: Static analysis tools such as linters identify potential errors and coding-standard violations in both application and test code. Some can also highlight security vulnerabilities, such as endpoints unintentionally exposed to the public or leaked environment variables and tokens.

- Expand testing types: In addition to functional testing, include security, accessibility, performance, and other types of non-functional testing in your testing strategy.
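Metrics such as pass rates and execution times can be computed from results your CI system already records. In this sketch, `RunRecord` is a hypothetical, simplified stand-in for whatever result format your test runner exports (JUnit-style XML reports are a common source).

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RunRecord:
    # Hypothetical, simplified record of one test execution exported from CI.
    test_name: str
    passed: bool
    duration_s: float


def summarize(runs: list[RunRecord]) -> dict:
    """Compute the overall pass rate plus per-test failure rate and average duration."""
    if not runs:
        return {"pass_rate": None, "per_test": {}}

    by_test: dict[str, list[RunRecord]] = defaultdict(list)
    for run in runs:
        by_test[run.test_name].append(run)

    per_test = {}
    for name, test_runs in by_test.items():
        failures = sum(1 for r in test_runs if not r.passed)
        per_test[name] = {
            # Across runs of unchanged code, a rate strictly between 0 and 1 may indicate a flaky test.
            "failure_rate": failures / len(test_runs),
            "avg_duration_s": sum(r.duration_s for r in test_runs) / len(test_runs),
        }

    return {
        "pass_rate": sum(r.passed for r in runs) / len(runs),
        "per_test": per_test,
    }
```

Tracking these numbers over several weeks, rather than for a single run, is what reveals trends such as a slowly growing suite duration or a test that has started failing intermittently.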

Conclusion

Software testing streamlines the development lifecycle, reduces bugs, and helps maintain application quality. Key software testing strategies include testing early and frequently, automating where feasible, balancing manual and automated tests, and continually expanding testing types. Using a dedicated tool like Multiplayer elevates testing processes by providing teams with functionality that streamlines and automates test plan creation, captures end-to-end user sessions, and integrates with AI tools to help you debug and improve your application.

By following the practices in this guide, development teams can effectively integrate different types of manual and automated testing into their workflows and ensure the timely delivery of quality software.